TL;DR: There are a lot of small things which you can do to improve translation reuse, but they’d have to be applied from the beginning of the project.

If you happen to have one product ready and translated, whereas the other(s) need translations, I advise you to sign up for POEditor. It is a very good product and you can tell the guys know what they’re doing. During a free trial, you can import the existing translations from one product and reuse them in another product thanks to the translation memory (TM), with very little effort. It supports XLIFF, Android XML, JSON and a few other file formats. The drawbacks are that its TM only finds exact matches, and after the trial, the pricing ain’t cheap if you have many strings and languages (that’s one of the reasons we didn’t buy the subscription).

To achieve good results, though, it’s worth reading on to avoid some problems which impede string reuse.

Conversely, if you’re just starting with your projects, I advise you to:

- follow these guidelines, and set up some processes to allow translation unit reuse in the future,

- translate only one app first, and iterate on the translations until they’re good enough,

- when the first app’s translation is done, sign up for POEditor or find another translation memory tool, and export the translations into the remaining products.

Introduction

Software translation and localization is a difficult subject. It seems deceptively simple, but as a Polish proverb says, “the farther into the forest, the more trees”. A large number of people are involved (developers, product managers, the translation agency, translators) and it may take many iterations to obtain good results (this is a subject for a whole separate blog post).

While there are many resources on the web about software translation best practices, there are very few about how to make multiple projects in disparate technologies share translations. Translation reuse is not straightforward, because each software platform and framework approaches the problem differently; but since translation agencies very often simply do not do good work, it’s important to aim for high translation reuse once good translations are available.

In this blog post, I will write about the task I had last month, which was to localize an Android app and reuse as many translations as possible from existing iOS and web apps. I will discuss the pain points to be aware of, and provide some solutions - but there is no silver bullet.

Best practices

I will start with two (of the many) best practices (BP) of software translation. I will refer to a string to be translated as a “translation unit”.

Best practice 1: don’t concatenate strings and variables, use a template string with placeholder

When dynamic data has to be put in the UI string, always use a translation unit with a placeholder, like `Delete %s from contact list?`, and replace the placeholder in the code.

Each language has its own word order. In German, for example, verbs often go at the end of the sentence. Concatenating strings therefore doesn’t work; the whole sentence has to be translated, and the placeholder positioned correctly by the translator.
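To make this concrete, here is a minimal Python sketch. The `TRANSLATIONS` table, the `delete_prompt` helper and the German wording are my own illustrative assumptions, not taken from any of the apps discussed here. The point is only that the placeholder lands in a different position in each language, which concatenation in code cannot express.

```python
# Hypothetical translation table: one template per language,
# with the placeholder placed by the translator.
TRANSLATIONS = {
    "en": "Delete %s from contact list?",
    "de": "%s aus der Kontaktliste löschen?",  # the name comes first in German
}


def delete_prompt(lang: str, contact_name: str) -> str:
    """Fill the placeholder of the translated template."""
    return TRANSLATIONS[lang] % (contact_name,)


print(delete_prompt("en", "Anna"))
print(delete_prompt("de", "Anna"))
```

With concatenation (`"Delete " + name + " from contact list?"`), the German translator would have no way to move the name to the front of the sentence.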

Best practice 2: always include punctuation in the translation unit

If the UI string looks like `Foobar:`, the translation unit must be `Foobar:`, not `Foobar`.

You might think you could just add the colon in the code or UI template, but it’s not correct.

The grammar rules of the French language say that characters like `:`, `;`, `!`, `?` must be preceded by a space. So the French translation of `Foobar:` will be `Foobar :`.

Real life

An important realization is that software translation is not a one-off thing, but a continuous process, which consists of:

- Initial translation,

- Adding new strings (and hence the new translations),

- Updating existing strings (and hence also the translations).

This alone makes it tricky and complex to keep things in sync, but translation reuse is more difficult than it should be mostly because each major platform handles translations differently.

Different conceptual attitude on iOS and Android

On Android, the standard is to externalize the translation units into a `strings.xml` file in an appropriate subfolder of the project, one per language (including the primary language).

On the web, the standard file format for data is now JSON, so naturally it’s also common to use it for the translations (at least in JavaScript-powered Single-Page Applications). Projects like angular-translate popularized this approach: have one JSON file per language (including the primary language), loaded dynamically at runtime, and then passed to the framework to populate the strings in the UI. From a translation point of view, this is quite similar to the Android approach, though there are some technical differences.

On iOS, the approach is quite different. A standard practice is that you actually DO hardcode the strings in the code, in the primary language of the app, but you wrap them in an `NSLocalizedString` call. Then, you can use Xcode to analyze the project and generate a set of XLIFF files for the translation agency (one per language, except the primary language), and when you get the translations back, you import them into the project as a set of `Localizable.strings` files.

At the very beginning of the project, I considered having a shared source-of-truth git repo with all the strings in the primary language, their translations, and some tooling on top that would build the platform-specific files. The idea was abandoned, though: iOS is too different from the other platforms, and the apps evolve at a different pace (which could become problematic at some point, though it could be solved with git branches).

Enter the translation memory

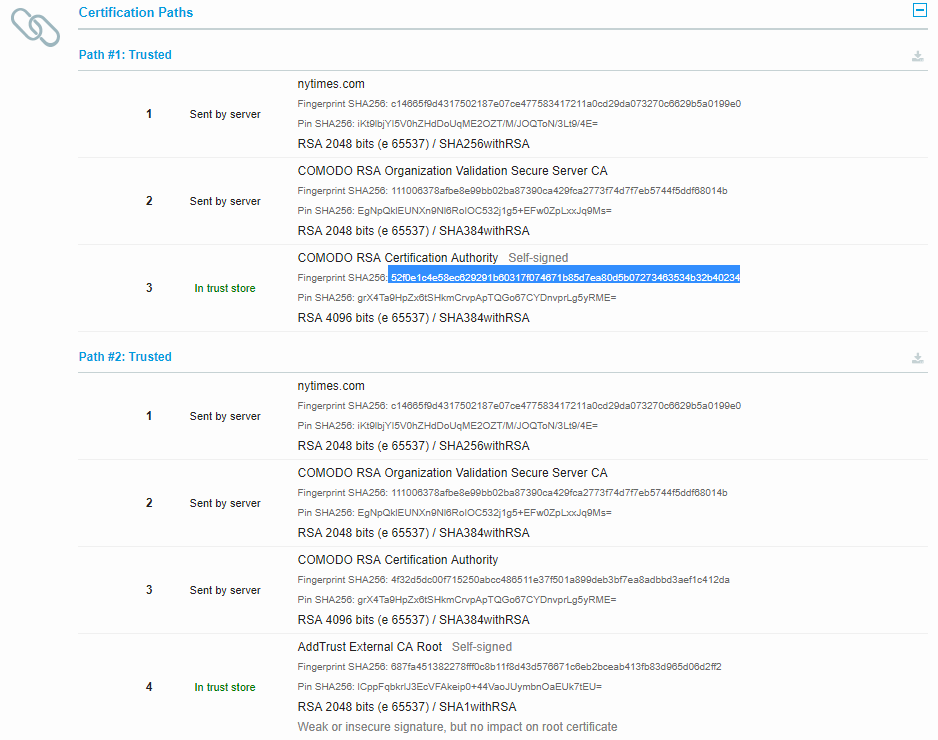

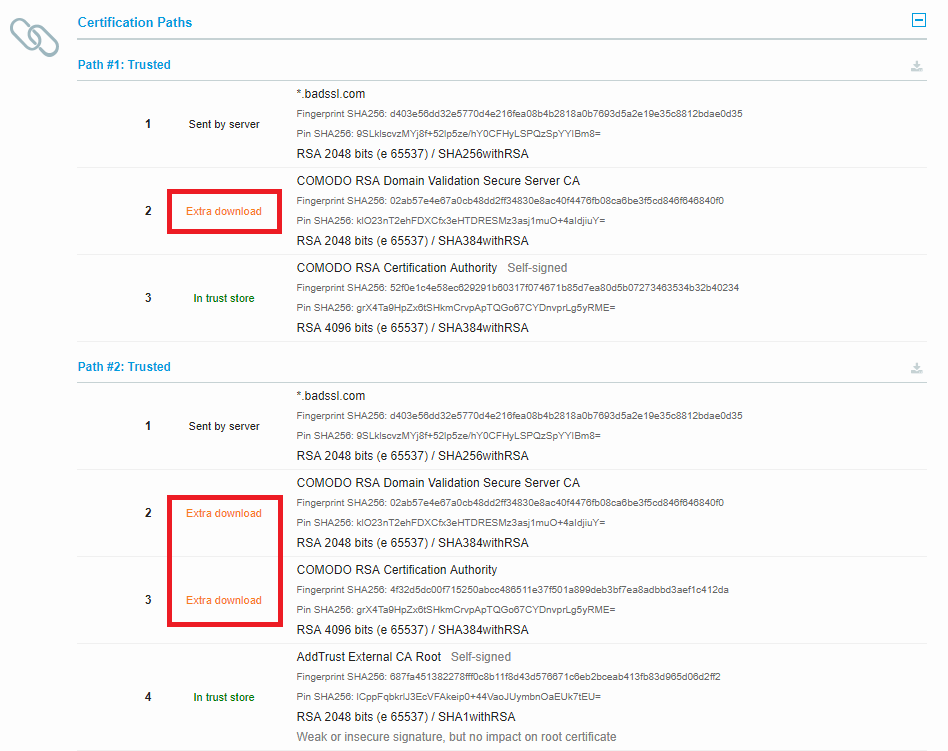

“Translation memory” (TM) is the opposite approach to “shared source-of-truth”: each platform remains independent, but it can pull translations from a shared pool. This is something that professional translation agencies use, but you can also find some free software that does it, or roll your own.

The way TMs work is simple: you push your translation units and respective translations to it, and then when you need to translate the same or similar translation unit from a different project, the TM finds a match.

Basic TMs can only find exact matches; more advanced TMs can find fuzzy matches, when the inputs vary only slightly. The problem is that there can be many small differences between translation units, for a number of reasons I will present below.
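To illustrate the difference between exact and fuzzy matching, here is a toy sketch built on `difflib.SequenceMatcher` from the Python standard library. It is not how POEditor or any particular TM works. The `best_match` helper, the `0.8` threshold and the sample French translation are assumptions made up for this example.

```python
from difflib import SequenceMatcher

# Toy translation memory: source string -> translation (hypothetical data).
MEMORY = {
    "Book now!": "Réservez maintenant !",
}


def best_match(source, memory, threshold=0.8):
    """Return (stored_source, translation, score) for the closest entry,
    or None if nothing is similar enough."""
    best = None
    for stored, translation in memory.items():
        score = SequenceMatcher(None, source, stored).ratio()
        if best is None or score > best[2]:
            best = (stored, translation, score)
    if best is not None and best[2] >= threshold:
        return best
    return None


print(best_match("Book now!", MEMORY))       # exact match, score 1.0
print(best_match("Book now", MEMORY))        # fuzzy match, score below 1.0
print(best_match("Delete contact", MEMORY))  # too different, no match
```

An exact-match-only TM would behave as if the threshold were `1.0`: `Book now` would find nothing, even though a nearly identical unit is already translated.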

Small but important impediments to translation unit reuse

Slightly different inputs

Mostly due to punctuation differences (`!`, `:` etc.), as previously explained in BP2: `Book now` vs `Book now!` are different translation units.

This can also happen due to:

- extra whitespace,

- different whitespace (regular vs non-breaking whitespace),

- differing apostrophes and quotes (regular ASCII vs. fancy ones).

Good TMs can handle it and find a fuzzy match, but it still might require manual intervention to align the translations.

Regarding the apostrophes and quotes, it might be interesting to have tooling in place to normalize them; for example to replace the ASCII ones with “fancy” ones (U+2019 right single quotation mark, U+201C and U+201D left/right double quotation mark). This has a few advantages:

- the fancy apostrophes and quotes don’t need to be `\`-escaped on Android,

- it avoids UI inconsistencies, which could otherwise creep in when different translators use one style or the other over time,

- guaranteed consistency between the platforms.

Non-breaking space (U+00A0) looks like a regular space in any code editor, hence it’s difficult to spot - beware.
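The normalization described above could be sketched as follows. This is a hypothetical helper, not an existing tool. It assumes it is given the text of a single translation unit (not raw XML, whose attribute quotes must stay ASCII), that every ASCII `'` is an apostrophe rather than an opening single quote, and that ASCII double quotes come in pairs. Non-breaking spaces are only reported, not replaced, because in French they may be intentional.

```python
import re
from typing import List


def normalize_quotes(text: str) -> str:
    """Replace ASCII apostrophes and paired double quotes with typographic ones."""
    # ASCII apostrophe -> U+2019 (right single quotation mark)
    text = text.replace("'", "\u2019")
    # "..." -> U+201C ... U+201D (left/right double quotation marks)
    text = re.sub(r'"([^"]*)"', "\u201c\\1\u201d", text)
    return text


def find_nbsp(text: str) -> List[int]:
    """Return the positions of non-breaking spaces (U+00A0) in the text."""
    return [i for i, ch in enumerate(text) if ch == "\u00a0"]


print(normalize_quotes('Don\'t press "OK"'))
print(find_nbsp("Foobar\u00a0:"))
```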

Side rant: Translation agencies often use TMs with fuzzy matching, but do not verify the result. Hence `Foobar` can be translated to `Foobar.`, which feels very wrong if it’s a UI string of a button. You might need some tooling to check that, or make sure your translation agency verifies that. Or both.

I wrote some tools to do a few simple checks like that on `strings.xml`, and I am planning to open source them soon. Once done, I will update this article, so feel free to bookmark this URL and come back later if interested.
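As an illustration of the kind of check meant here (a hypothetical sketch, not the tooling mentioned above), the following Python snippet flags translations whose trailing punctuation differs from the source. The set of punctuation characters and the function names are assumptions. It is a heuristic, and languages that use different punctuation marks would need their own rules.

```python
# Characters treated as "trailing punctuation" (an assumption for this sketch).
TRAILING = ".!?:;\u2026"


def trailing_punct(text: str) -> str:
    """Return the last non-whitespace character if it is punctuation, else ''."""
    stripped = text.rstrip()
    if stripped and stripped[-1] in TRAILING:
        return stripped[-1]
    return ""


def punctuation_mismatch(source: str, translation: str) -> bool:
    """True if source and translation end with different punctuation."""
    return trailing_punct(source) != trailing_punct(translation)


print(punctuation_mismatch("Foobar", "Foobar."))   # suspicious
print(punctuation_mismatch("Foobar:", "Foobar :")) # fine: French spacing, same mark
```

Only the last character is compared, so the French space before `:` or `!` does not trigger a false alarm.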

Different casing

`Book now!` and `BOOK NOW!` are different translation units.

Again, a fuzzy-matching TM can find the match, but it’s not ideal to have a mismatch between the platforms.

Which one is better? The opinions are split. The non-uppercased version is theoretically better, because it’s trivial to uppercase if needed, whereas the reverse is not true: you cannot easily lowercase a string in a correct way (particularly in German, where nouns are always capitalized). However, there are reports stating that uppercasing at runtime on Android can lead to dropped frames while scrolling, so you need to decide yourself which trade-off to make.
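A quick Python illustration of why uppercasing is a one-way street. The German label is a made-up example, and the `ß` case is an extra illustration of mine rather than something that came up in our apps.

```python
label = "Jetzt Flug buchen"  # hypothetical German UI string; "Flug" is a noun

upper = label.upper()
print(upper)               # JETZT FLUG BUCHEN
print(upper.lower())       # jetzt flug buchen  (the noun lost its capital)
print(upper.capitalize())  # Jetzt flug buchen  (still wrong: "flug")

# Uppercasing can also change the letters themselves:
street = "Straße"
print(street.upper())          # STRASSE
print(street.upper().lower())  # strasse, not straße
```

Going from the original to uppercase is mechanical; going back requires knowing which words are nouns, which is the translator’s job, not the code’s.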

Placeholders

As stated in BP1, you should use placeholders. You do? Good.

Strings with placeholders are the most difficult to translate, and hence the most important to reuse once they are translated. Of course, it would be too easy if that were straightforward.

Android uses placeholders like `%d`, `%s`, `%1$s`, `%2$s`; iOS uses `%@`, `%1$@`, `%2$@`; and various 3rd-party libraries offer different syntaxes.

It might be reasonable to find a set of libraries (or write them yourself if necessary) that will allow the exact same syntax on all platforms. The syntax from Phrase looks nice. However, the problem with named placeholders is that each team might use a different placeholder name, and then we’re in an even worse position than before unless both teams cooperate.

Another solution (if you use `%d`/`%@` etc.) could be to normalize placeholders at the time when you export the strings for translation. For example: after exporting XLIFF from Xcode, search-and-replace `%@` with `%s`; send the replaced XLIFF to the translators; when it comes back, reverse the replacements.
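The search-and-replace round trip could be scripted along these lines. This is a sketch with hypothetical function names. It assumes the iOS strings never use a genuine `%s` (otherwise the reverse step would wrongly turn it into `%@`), and it leaves the escaped percent sign `%%` alone.

```python
import re

# "%%" is listed first so that an escaped percent sign is never rewritten.
_IOS_OBJECT = re.compile(r"%%|%(\d+\$)?@")
_PRINTF_STRING = re.compile(r"%%|%(\d+\$)?s")


def _swap(pattern, conversion, text):
    """Replace the conversion character, keeping any positional index like '1$'."""
    def repl(match):
        if match.group(0) == "%%":
            return "%%"
        return "%" + (match.group(1) or "") + conversion
    return pattern.sub(repl, text)


def ios_to_neutral(text: str) -> str:
    """%@ -> %s and %1$@ -> %1$s, before sending strings to the translators."""
    return _swap(_IOS_OBJECT, "s", text)


def neutral_to_ios(text: str) -> str:
    """%s -> %@ and %1$s -> %1$@, after the translations come back."""
    return _swap(_PRINTF_STRING, "@", text)


print(ios_to_neutral("Delete %@ from contact list?"))
print(neutral_to_ios("%1$s invited %2$s"))
```

In practice this would be applied to the text of each translation unit in the exported XLIFF, not to the XML file as a whole.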

One more Android caveat: in `strings.xml`, you can wrap your placeholder with an XLIFF tag to make the work easier for a (knowledgeable) translator: `Book a flight to <xliff:g example="London">%s</xliff:g>`, and those XLIFF tags will be discarded at compilation time. While in theory it sounds great, in practice it nearly guarantees that the translation memory won’t match this string with an existing string in its database (i.e. the string from iOS won’t be reused). Yet another trade-off to make.
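One possible way to soften this trade-off is to strip the `<xliff:g>` wrappers from a copy of the string before looking it up in the TM. The sketch below is a hypothetical helper based on a regular expression. It assumes the tags are not nested and that attribute values contain no `>` character; a real tool would be better off using an XML parser.

```python
import re

# Matches <xliff:g ...>content</xliff:g> and captures the content.
_XLIFF_G = re.compile(r"<xliff:g\b[^>]*>(.*?)</xliff:g>", re.DOTALL)


def strip_xliff_g(text: str) -> str:
    """Remove <xliff:g> wrappers, keeping the placeholder inside them."""
    return _XLIFF_G.sub(r"\1", text)


print(strip_xliff_g('Book a flight to <xliff:g example="London">%s</xliff:g>'))
```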

Plurals

While it’s hard with placeholders, it’s even harder with plurals, because pluralization rules differ between languages, and of course each platform or library handles them differently.

Android’s implementation seems very good to me: one string per quantity-indicator, grouped together. Some libraries like messageformat encode the quantity-selection logic into just one string, which in my opinion is not the most readable nor easiest for the translation agencies, but often they work the way they do due to technical limitations of their ecosystem.
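To show how much the rules can differ, here is a small Python sketch comparing English with Polish for non-negative whole numbers. The category names (`one`, `few`, `many`, `other`) follow the quantity names used by Android. The functions and the sample strings are my own illustration, and fractions are ignored.

```python
def plural_category_en(n: int) -> str:
    """English: singular for exactly 1, plural for everything else."""
    return "one" if n == 1 else "other"


def plural_category_pl(n: int) -> str:
    """Polish (whole numbers only): three categories instead of two."""
    if n == 1:
        return "one"
    if 2 <= n % 10 <= 4 and not 12 <= n % 100 <= 14:
        return "few"
    return "many"


# One string per quantity, grouped together, as in an Android <plurals> block.
FILES_PL = {"one": "%d plik", "few": "%d pliki", "many": "%d plików"}


def files_label_pl(n: int) -> str:
    return FILES_PL[plural_category_pl(n)] % (n,)


for n in (1, 2, 5, 12, 22):
    print(files_label_pl(n))
```

A translation unit that needs two strings in English needs three in Polish, which is one reason why a TM has a hard time matching plurals across platforms.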

Translation reuse for plurals is hard to achieve. It’s probably just easiest to translate those separately on each platform, or to make UI decisions that remove the need for pluralization altogether.

BTW: In our Android app, we moved all `<plurals>` to the very end of `strings.xml` so that they are not mixed with the regular strings.

Process-related impediments

One non-obvious pain point we only learnt about at the last moment was the simple fact that the UI strings had diverged between the platforms.

While we were working on the Android app, quite a few of the UI strings in the iOS app changed, but were not updated in the Android codebase. When it came to translating Android, it turned out that many strings were simply not there in the iOS app anymore, and it took a while to find the appropriate strings in the iOS app and update the Android sources.

Corollary

Proper translation reuse is only possible with strong cooperation from all the teams. In practice, this is hard to achieve. Aim for the best, but assume that it will just not happen, and that you’ll achieve a reuse of something like 70-80%.